ViRel: Unsupervised Visual Relations Discovery with Graph-level Analogy

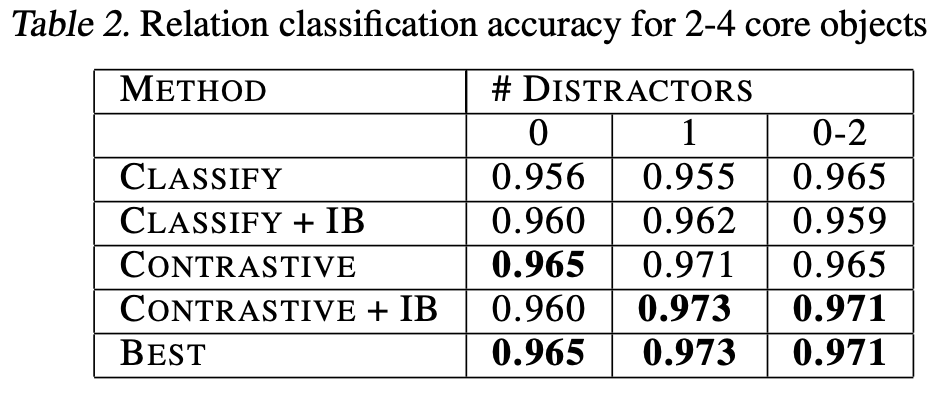

We introduce ViRel (Visual Relations with graph-level analogy), a method for unsupervised discovery and learning of visual relations using graph-level analogy. On a grid-world-based dataset that tests visual relation reasoning, it achieves above 95% accuracy in unsupervised relation classification, discovers the relation graph structure for most tasks, and further generalizes to unseen tasks with more complicated relational structures.

Method

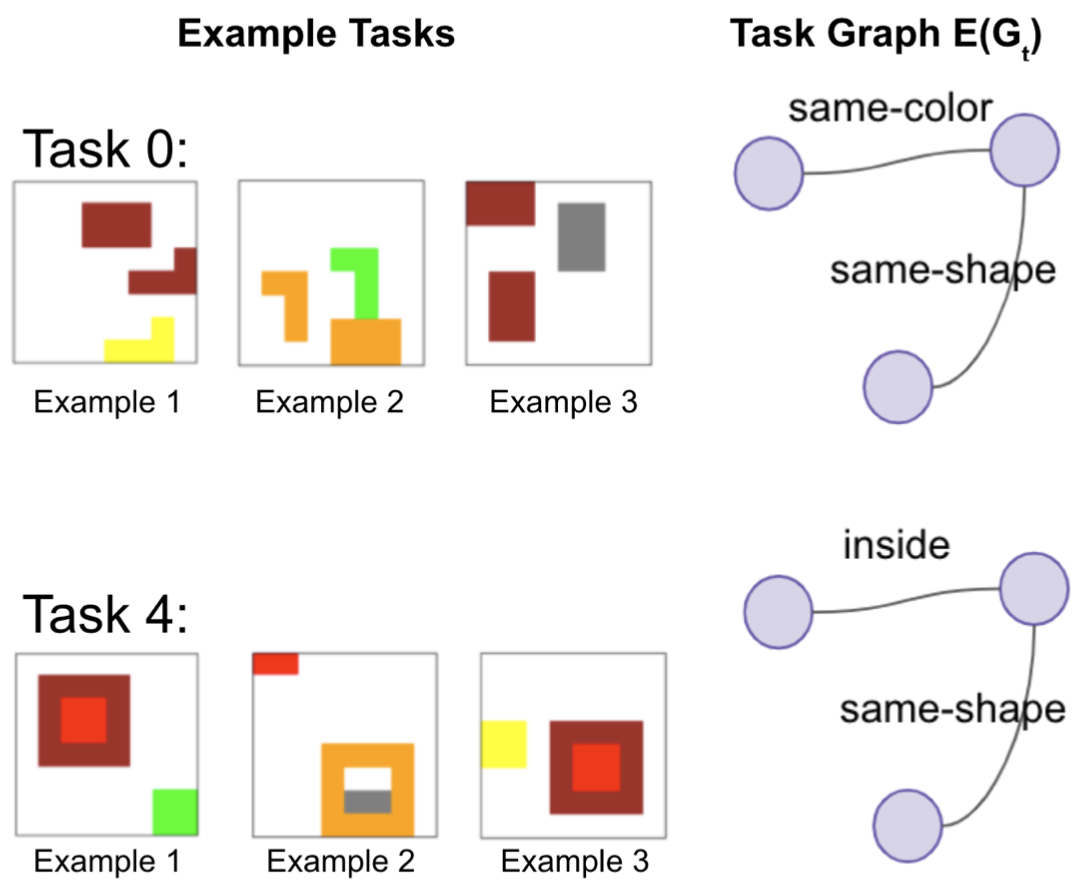

Visual relations form the basis of understanding our compositional world, as relationships between visual objects capture key information in a scene. It is therefore advantageous to learn relations automatically from data, since learning with predefined labels cannot capture all possible relations. However, current relation learning methods typically require supervision, and are not designed to generalize to scenes with more complicated relational structures than those seen during training.

Here, we introduce ViRel, a method for unsupervised discovery and learning of Visual Relations with graph-level analogy. The following figure illustrates the task setting. Each task t contains images with a shared relation subgraph $E(G_t)$. The inputs are the image examples under "Example Tasks". Both the relation types and the graph of each task are unknown. The goal is to infer the relation graph $E(G_i)$, the corresponding relations, and the shared relation graph $E(G_t)$ for each task t. The task is challenging because both the underlying global visual relations (e.g. "inside", "same-shape") and the shared relation graph must be inferred from the raw pixels of only a few images.

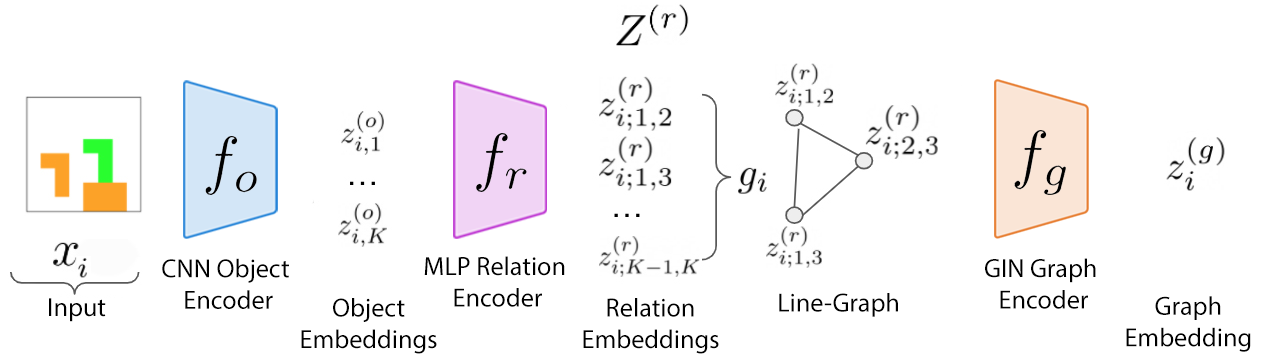

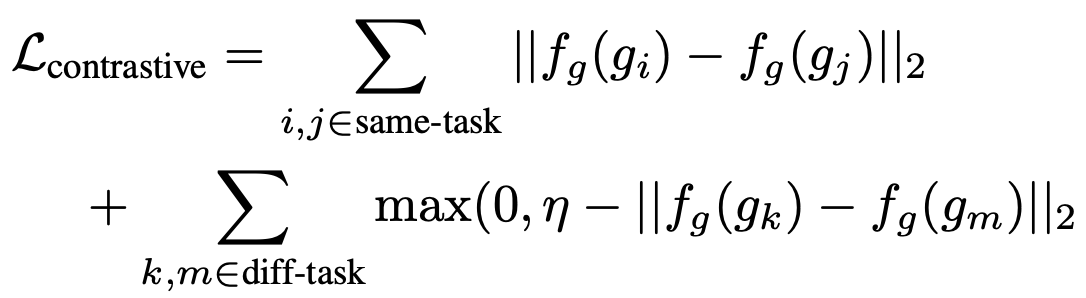
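To make the notion of a shared relation subgraph concrete, here is a minimal Python sketch. It assumes that each image has already been parsed into a set of labeled edges `(source, relation, target)`, which is the hard part that ViRel itself addresses. The function names `contains_pattern` and `is_shared` and the toy graphs are hypothetical illustrations, not part of the ViRel code. The check is brute force over node assignments, so it is only practical for the small graphs used here.

```python
from itertools import permutations

# An edge is a triple (source_node, relation_label, target_node).
# Nodes are integers 0..n-1; the relation labels follow the examples in the text.

def contains_pattern(example_edges, n_example_nodes, pattern_edges, n_pattern_nodes):
    """Return True if the pattern occurs as a labeled subgraph of the example.

    Tries every injective mapping of pattern nodes onto example nodes
    (hypothetical helper, brute force).
    """
    example = set(example_edges)
    for assignment in permutations(range(n_example_nodes), n_pattern_nodes):
        if all((assignment[s], rel, assignment[t]) in example
               for s, rel, t in pattern_edges):
            return True
    return False

def is_shared(task_examples, pattern_edges, n_pattern_nodes):
    """Return True if the pattern occurs in every example of the task."""
    return all(
        contains_pattern(edges, n_nodes, pattern_edges, n_pattern_nodes)
        for edges, n_nodes in task_examples
    )

# A toy task with two examples, each given as (edges, number_of_nodes).
task = [
    ([(0, "inside", 1), (1, "same-shape", 2)], 3),
    ([(2, "inside", 0), (0, "same-shape", 1), (1, "inside", 2)], 3),
]

# Candidate shared subgraph: A inside B, B same-shape C.
chain = [(0, "inside", 1), (1, "same-shape", 2)]
# Candidate that only the second example contains: A inside B, B inside C.
nested = [(0, "inside", 1), (1, "inside", 2)]

print(is_shared(task, chain, 3))
print(is_shared(task, nested, 3))
```

In this toy task the `chain` pattern appears in both examples under different node orderings, while `nested` appears only in the second, so only `chain` qualifies as a shared relation subgraph.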

To address this challenging task, our method ViRel contrasts isomorphic and non-isomorphic graphs to discover the relations across tasks in an unsupervised manner, with the following architecture and contrastive loss.
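The exact ViRel objective is given in the paper and is not reproduced here. As a rough illustration of what contrasting matching and non-matching graphs can look like, the sketch below uses a generic InfoNCE-style loss on graph-level embeddings. The embedding vectors, the `temperature` value, and the function names are all assumptions made for this example: the positive stands in for a graph isomorphic to the anchor, and the negatives stand in for non-isomorphic graphs.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length, non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def contrastive_loss(anchor, positive, negatives, temperature=0.1):
    """Generic InfoNCE-style loss (an assumption, not the paper's loss).

    loss = -log( exp(s_pos / T) / (exp(s_pos / T) + sum_k exp(s_neg_k / T)) )
    """
    pos_logit = cosine(anchor, positive) / temperature
    logits = [pos_logit] + [cosine(anchor, n) / temperature for n in negatives]
    # Log-sum-exp with the maximum subtracted for numerical stability.
    m = max(logits)
    log_denominator = m + math.log(sum(math.exp(x - m) for x in logits))
    return log_denominator - pos_logit

# Hypothetical graph-level embeddings.
anchor = [1.0, 0.0]
isomorphic = [1.0, 0.0]      # embedding of a graph isomorphic to the anchor
non_isomorphic = [0.0, 1.0]  # embedding of a non-isomorphic graph

good = contrastive_loss(anchor, isomorphic, [non_isomorphic])
bad = contrastive_loss(anchor, non_isomorphic, [isomorphic])
print(good < bad)
```

The loss is near zero when the isomorphic graph is embedded close to the anchor and the non-isomorphic graph far from it, and large in the reverse case, which is the pressure a contrastive objective of this kind applies during training.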

Once the relations are learned, ViRel can then retrieve the shared relational graph structure for each task by parsing the predicted relational structure. Using a dataset based on grid-world and the Abstract Reasoning Corpus, we show that our method achieves above 95% accuracy in relation classification, discovers the relation graph structure for most tasks, and further generalizes to unseen tasks with more complicated relational structures. An example result is shown below:

For more information and results, please see the paper.

Code

A reference implementation of ViRel in PyTorch will be available on GitHub.

Datasets

The datasets used by ViRel are included in the code repository, which can also be used to generate them.

Contributors

The following people contributed to ViRel:

Daniel Zeng*

Tailin Wu*

Jure Leskovec