
SNAP Library 4.1, Developer Reference

2018-07-26 16:30:42

SNAP, a general purpose, high performance system for analysis and manipulation of large networks


Functions

| Return type | Function | Description |
|---|---|---|
| void | `LearnVocab(TVVec<TInt, int64>& WalksVV, TIntV& Vocab)` | |
| void | `InitUnigramTable(TIntV& Vocab, TIntV& KTable, TFltV& UTable)` | |
| int64 | `RndUnigramInt(TIntV& KTable, TFltV& UTable, TRnd& Rnd)` | |
| void | `InitNegEmb(TIntV& Vocab, const int& Dimensions, TVVec<TFlt, int64>& SynNeg)` | |
| void | `InitPosEmb(TIntV& Vocab, const int& Dimensions, TRnd& Rnd, TVVec<TFlt, int64>& SynPos)` | |
| void | `TrainModel(TVVec<TInt, int64>& WalksVV, const int& Dimensions, const int& WinSize, const int& Iter, const bool& Verbose, TIntV& KTable, TFltV& UTable, int64& WordCntAll, TFltV& ExpTable, double& Alpha, int64 CurrWalk, TRnd& Rnd, TVVec<TFlt, int64>& SynNeg, TVVec<TFlt, int64>& SynPos)` | |
| void | `LearnEmbeddings(TVVec<TInt, int64>& WalksVV, const int& Dimensions, const int& WinSize, const int& Iter, const bool& Verbose, TIntFltVH& EmbeddingsHV)` | Learns embeddings using SGD, skip-gram with negative sampling. |

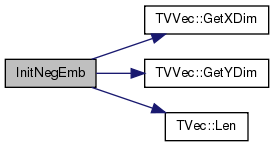

Definition at line 73 of file word2vec.cpp.

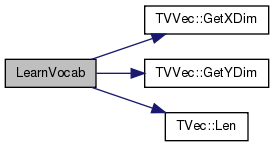

References TVVec< TVal, TSizeTy >::GetXDim(), TVVec< TVal, TSizeTy >::GetYDim(), and TVec< TVal, TSizeTy >::Len().

Referenced by LearnEmbeddings().

`void InitPosEmb(TIntV& Vocab, const int& Dimensions, TRnd& Rnd, TVVec<TFlt, int64>& SynPos)`

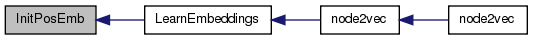

Definition at line 83 of file word2vec.cpp.

References TRnd::GetUniDev(), TVVec< TVal, TSizeTy >::GetXDim(), TVVec< TVal, TSizeTy >::GetYDim(), and TVec< TVal, TSizeTy >::Len().

Referenced by LearnEmbeddings().
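Unlike InitNegEmb, InitPosEmb takes a TRnd and calls TRnd::GetUniDev(), so the input-side vectors are presumably randomized rather than zeroed. A minimal sketch, assuming the reference word2vec scheme of drawing each component as (u - 0.5) / Dimensions with u uniform on [0, 1). `std::mt19937` stands in for TRnd, and `InitPosEmbSketch` is a hypothetical name.

```cpp
#include <cassert>
#include <cstdint>
#include <random>
#include <vector>

// Hypothetical sketch of InitPosEmb: a vocab.size() x dimensions row-major
// matrix of small random values. The (u - 0.5) / dimensions scaling is an
// assumption taken from the reference word2vec; each uniform draw plays the
// role of TRnd::GetUniDev().
std::vector<double> InitPosEmbSketch(const std::vector<int64_t>& vocab,
                                     int dimensions, std::mt19937& rnd) {
  assert(dimensions > 0);
  std::uniform_real_distribution<double> uni(0.0, 1.0);
  std::vector<double> synPos(vocab.size() * static_cast<size_t>(dimensions));
  for (double& x : synPos) {
    x = (uni(rnd) - 0.5) / dimensions;  // roughly within [-0.5/d, 0.5/d)
  }
  return synPos;
}
```

Scaling by 1/Dimensions keeps the initial dot products between vectors small regardless of the embedding size.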

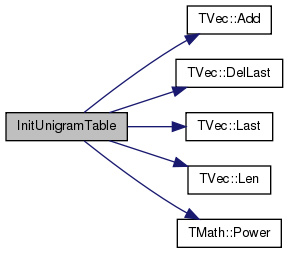

Definition at line 18 of file word2vec.cpp.

References TVec< TVal, TSizeTy >::Add(), TVec< TVal, TSizeTy >::DelLast(), TVec< TVal, TSizeTy >::Last(), TVec< TVal, TSizeTy >::Len(), and TMath::Power().

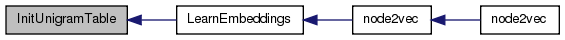

Referenced by LearnEmbeddings().
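The two output tables KTable and UTable, together with the stack-style calls Add(), Last() and DelLast(), are consistent with Walker's alias method, which lets negatives be drawn in constant time. A minimal sketch under that assumption; the 0.75 exponent (applied here with `std::pow` where SNAP references TMath::Power()) is assumed from the reference word2vec, and `InitUnigramTableSketch` and the `std::vector` types are stand-ins, not SNAP API.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>
#include <vector>

// Hypothetical alias-table builder (Walker's method), assumed to mirror
// InitUnigramTable. vocab[i] is the occurrence count of node i; node i should
// be sampled with probability proportional to vocab[i]^0.75.
// On return, uTable[i] is the probability of keeping column i and kTable[i]
// is the alias returned otherwise.
void InitUnigramTableSketch(const std::vector<int64_t>& vocab,
                            std::vector<int64_t>& kTable,
                            std::vector<double>& uTable) {
  const size_t n = vocab.size();
  assert(n > 0);
  std::vector<double> prob(n);
  double total = 0.0;
  for (size_t i = 0; i < n; i++) {
    prob[i] = std::pow(static_cast<double>(vocab[i]), 0.75);  // assumed exponent
    total += prob[i];
  }
  assert(total > 0.0);
  kTable.assign(n, 0);
  uTable.assign(n, 0.0);
  std::vector<int64_t> under, over;  // stacks: columns below 1 / at or above 1
  for (size_t i = 0; i < n; i++) {
    const int64_t col = static_cast<int64_t>(i);
    uTable[i] = prob[i] / total * static_cast<double>(n);
    kTable[i] = col;  // alias defaults to the column itself
    if (uTable[i] < 1.0) under.push_back(col); else over.push_back(col);
  }
  // Repeatedly top up an underfull column with mass from an overfull one.
  while (!under.empty() && !over.empty()) {
    const int64_t smallCol = under.back(); under.pop_back();
    const int64_t largeCol = over.back(); over.pop_back();
    kTable[smallCol] = largeCol;
    uTable[largeCol] = uTable[largeCol] + uTable[smallCol] - 1.0;
    if (uTable[largeCol] < 1.0) under.push_back(largeCol); else over.push_back(largeCol);
  }
  // Leftover columns differ from 1 only by floating-point error.
  for (int64_t col : under) uTable[col] = 1.0;
  for (int64_t col : over) uTable[col] = 1.0;
}
```

For counts {1, 16, 81, 0} the weights are 1, 8, 27 and 0. Column i keeps mass UTable[i]/n and passes (1 - UTable[i])/n to KTable[i], so summing over columns recovers 1/36, 8/36, 27/36 and 0.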

`void LearnEmbeddings(TVVec<TInt, int64>& WalksVV, const int& Dimensions, const int& WinSize, const int& Iter, const bool& Verbose, TIntFltVH& EmbeddingsHV)`

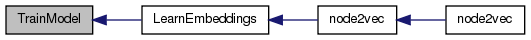

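Learns node embeddings from the random walks in WalksVV using stochastic gradient descent (SGD) on the skip-gram objective with negative sampling. The resulting Dimensions-long vectors are stored in EmbeddingsHV, keyed by node id.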

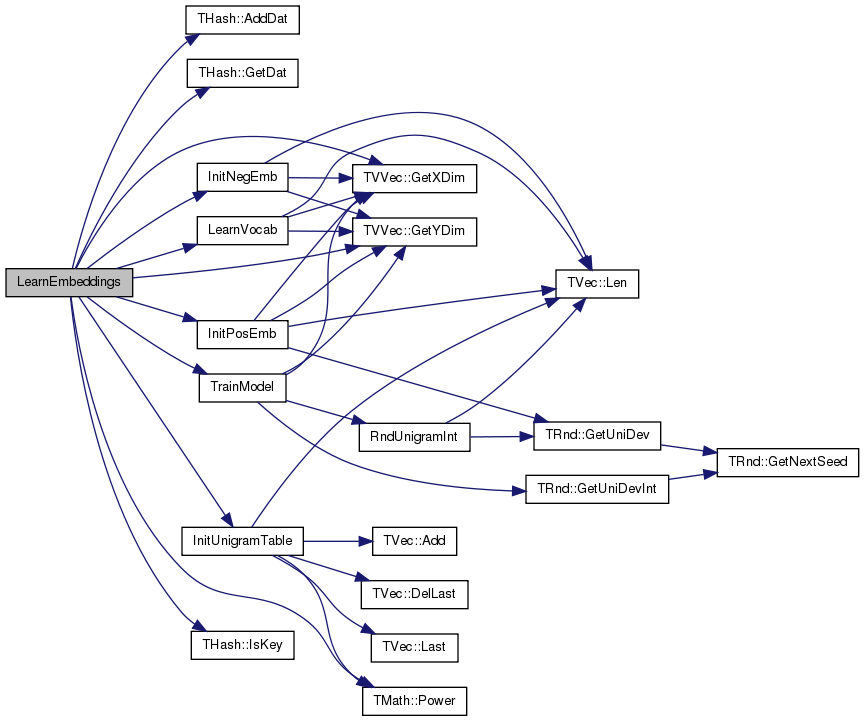

Definition at line 160 of file word2vec.cpp.

References THash< TKey, TDat, THashFunc >::AddDat(), TMath::E, ExpTablePrecision, THash< TKey, TDat, THashFunc >::GetDat(), TVVec< TVal, TSizeTy >::GetXDim(), TVVec< TVal, TSizeTy >::GetYDim(), InitNegEmb(), InitPosEmb(), InitUnigramTable(), THash< TKey, TDat, THashFunc >::IsKey(), LearnVocab(), MaxExp, TMath::Power(), StartAlpha, TableSize, and TrainModel().

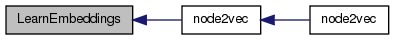

Referenced by node2vec().
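LearnEmbeddings references THash's IsKey(), AddDat() and GetDat(), as well as TMath::E, MaxExp, ExpTablePrecision and TableSize. A sketch of two setup steps these references suggest, both assumptions rather than SNAP's confirmed code. The first renumbers node ids in the walks to consecutive indices so that Vocab and the embedding matrices can be indexed densely. The second precomputes a sigmoid lookup table over [-MaxExp, MaxExp). The constant values are illustrative only, and `RenumberWalksSketch`, `BuildExpTableSketch` and `SigmoidLookup` are hypothetical names.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>
#include <unordered_map>
#include <vector>

// Illustrative constants; SNAP defines MaxExp, ExpTablePrecision and
// TableSize itself, and these values are assumptions.
constexpr int kMaxExp = 6;
constexpr int kExpTablePrecision = 10000;
constexpr int kTableSize = kMaxExp * kExpTablePrecision * 2;

// Replace every node id in the walks by a consecutive index 0..N-1 and record
// the inverse mapping in backMap. Returns N, the number of distinct nodes.
int64_t RenumberWalksSketch(std::vector<std::vector<int64_t>>& walks,
                            std::vector<int64_t>& backMap) {
  std::unordered_map<int64_t, int64_t> toIndex;  // original id -> index
  backMap.clear();                               // index -> original id
  for (auto& walk : walks) {
    for (auto& node : walk) {
      auto it = toIndex.find(node);
      if (it != toIndex.end()) {
        node = it->second;
      } else {
        const int64_t idx = static_cast<int64_t>(backMap.size());
        toIndex.emplace(node, idx);
        backMap.push_back(node);
        node = idx;
      }
    }
  }
  return static_cast<int64_t>(backMap.size());
}

// Precompute sigmoid(x) = e^x / (e^x + 1) at x = i / kExpTablePrecision - kMaxExp.
std::vector<double> BuildExpTableSketch() {
  std::vector<double> table(kTableSize);
  for (int i = 0; i < kTableSize; i++) {
    const double e = std::exp(static_cast<double>(i) / kExpTablePrecision - kMaxExp);
    table[i] = e / (e + 1.0);
  }
  return table;
}

// Table lookup for |x| < kMaxExp. Outside that range the reference word2vec
// treats the sigmoid as saturated at 0 or 1 instead of reading the table.
double SigmoidLookup(const std::vector<double>& table, double x) {
  assert(x > -kMaxExp && x < kMaxExp);
  const int idx = static_cast<int>((x + kMaxExp) * kExpTablePrecision);
  return table[idx];
}
```

With the back map, a learned row i can be stored under its original node id, which is the shape EmbeddingsHV has.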

`void LearnVocab(TVVec<TInt, int64>& WalksVV, TIntV& Vocab)`

Definition at line 8 of file word2vec.cpp.

References TVVec< TVal, TSizeTy >::GetXDim(), TVVec< TVal, TSizeTy >::GetYDim(), and TVec< TVal, TSizeTy >::Len().

Referenced by LearnEmbeddings().
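LearnVocab reads the dimensions of WalksVV and the length of Vocab, which suggests a per-node frequency count over all walks. A minimal sketch, assuming Vocab is presized to the number of distinct nodes and that walk entries are already consecutive indices. `LearnVocabSketch` is hypothetical, and nested `std::vector`s stand in for TVVec and TIntV.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical sketch of LearnVocab: walks are the rows of WalksVV; vocab must
// already have one slot per distinct node. Afterwards vocab[id] is the number
// of times node `id` occurs across all walks.
void LearnVocabSketch(const std::vector<std::vector<int64_t>>& walks,
                      std::vector<int64_t>& vocab) {
  for (auto& count : vocab) count = 0;  // reset any previous counts
  for (const auto& walk : walks) {
    for (int64_t node : walk) {
      assert(node >= 0 && node < static_cast<int64_t>(vocab.size()));
      vocab[node]++;
    }
  }
}
```

These counts are what the unigram (negative-sampling) table is built from.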

`int64 RndUnigramInt(TIntV& KTable, TFltV& UTable, TRnd& Rnd)`

Definition at line 66 of file word2vec.cpp.

References TRnd::GetUniDev(), and TVec< TVal, TSizeTy >::Len().

Referenced by TrainModel().
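RndUnigramInt is called from TrainModel and uses TRnd::GetUniDev(). If KTable and UTable form an alias table, drawing one negative node takes two uniform numbers: one to pick a column and one to decide between the column and its alias. A minimal sketch under that assumption, with `std::mt19937` in place of TRnd; `RndUnigramIntSketch` is a hypothetical name.

```cpp
#include <cassert>
#include <cstdint>
#include <random>
#include <vector>

// Hypothetical alias-method draw, assumed to match RndUnigramInt. Each uniform
// draw plays the role of TRnd::GetUniDev().
int64_t RndUnigramIntSketch(const std::vector<int64_t>& kTable,
                            const std::vector<double>& uTable,
                            std::mt19937& rnd) {
  assert(!kTable.empty() && kTable.size() == uTable.size());
  std::uniform_real_distribution<double> uni(0.0, 1.0);
  const size_t n = kTable.size();
  // Pick a column uniformly at random.
  size_t col = static_cast<size_t>(uni(rnd) * static_cast<double>(n));
  if (col >= n) col = n - 1;  // guard against a draw of exactly 1.0
  // Keep the column with probability uTable[col], otherwise take its alias.
  return uni(rnd) < uTable[col] ? static_cast<int64_t>(col) : kTable[col];
}
```

With UTable = {1/9, 8/9, 1, 0} and every alias pointing at node 2, node 3 is never returned and nodes 0, 1 and 2 appear with frequencies near 1/36, 8/36 and 27/36.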

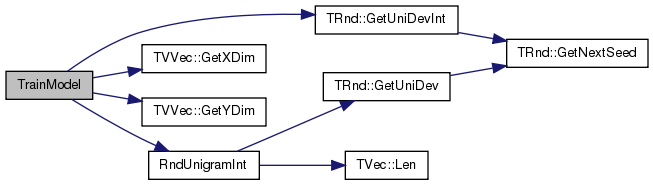

`void TrainModel(TVVec<TInt, int64>& WalksVV, const int& Dimensions, const int& WinSize, const int& Iter, const bool& Verbose, TIntV& KTable, TFltV& UTable, int64& WordCntAll, TFltV& ExpTable, double& Alpha, int64 CurrWalk, TRnd& Rnd, TVVec<TFlt, int64>& SynNeg, TVVec<TFlt, int64>& SynPos)`

Definition at line 92 of file word2vec.cpp.

References ExpTablePrecision, TRnd::GetUniDevInt(), TVVec< TVal, TSizeTy >::GetXDim(), TVVec< TVal, TSizeTy >::GetYDim(), MaxExp, NegSamN, RndUnigramInt(), StartAlpha, and TableSize.

Referenced by LearnEmbeddings().
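TrainModel receives both embedding matrices, the learning rate Alpha, the precomputed ExpTable and the alias tables, and it references NegSamN and StartAlpha. A minimal sketch of one skip-gram negative-sampling update in the style of the reference word2vec, plus that implementation's linear learning-rate decay. The sketch assumes SynPos holds input vectors and SynNeg holds output vectors. It computes the sigmoid directly instead of through ExpTable and uses illustrative constants. `SgnsStepSketch` and `DecayedAlpha` are hypothetical names.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>
#include <vector>

constexpr double kMaxExp = 6.0;        // assumed saturation bound, as in word2vec
constexpr double kStartAlpha = 0.025;  // assumed starting learning rate

// Linear decay from kStartAlpha toward a small floor as training progresses
// (schedule assumed from the reference word2vec).
double DecayedAlpha(int64_t wordsSeen, int64_t totalWords, int iters) {
  const double frac = static_cast<double>(wordsSeen) /
      (static_cast<double>(iters) * static_cast<double>(totalWords) + 1.0);
  const double alpha = kStartAlpha * (1.0 - frac);
  const double floorAlpha = kStartAlpha * 1e-4;
  return alpha < floorAlpha ? floorAlpha : alpha;
}

// One skip-gram negative-sampling step on row-major (vocab x dim) matrices:
// pull synNeg[target] toward synPos[input], push each sampled negative away,
// then apply the accumulated gradient to synPos[input].
void SgnsStepSketch(std::vector<double>& synPos, std::vector<double>& synNeg,
                    int dim, int64_t input, int64_t target,
                    const std::vector<int64_t>& negatives, double alpha) {
  assert(dim > 0);
  std::vector<double> grad(static_cast<size_t>(dim), 0.0);
  double* in = &synPos[static_cast<size_t>(input * dim)];
  for (size_t d = 0; d <= negatives.size(); d++) {
    const int64_t node = (d == 0) ? target : negatives[d - 1];
    const double label = (d == 0) ? 1.0 : 0.0;
    if (d > 0 && node == target) continue;  // a negative equal to the positive is skipped
    double* out = &synNeg[static_cast<size_t>(node * dim)];
    double f = 0.0;
    for (int k = 0; k < dim; k++) f += in[k] * out[k];
    const double sig = f > kMaxExp ? 1.0
                     : (f < -kMaxExp ? 0.0 : 1.0 / (1.0 + std::exp(-f)));
    const double g = (label - sig) * alpha;
    for (int k = 0; k < dim; k++) grad[k] += g * out[k];
    for (int k = 0; k < dim; k++) out[k] += g * in[k];
  }
  for (int k = 0; k < dim; k++) in[k] += grad[k];
}
```

With all-zero output vectors (as after a zero-initialized SynNeg), the first step leaves the input vector unchanged and moves the positive and negative output rows by +alpha/2 and -alpha/2 times the input vector.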