Graph Information Bottleneck

We introduce Graph Information Bottleneck (GIB), an information-theoretic principle that learns robust representation for graphs. Built upon the principle, we propose two GNN models GIB-Cat and GIB-Bern that achieves state-of-the-art performance against adversarial attacks on structure or node features of graph data.

Method

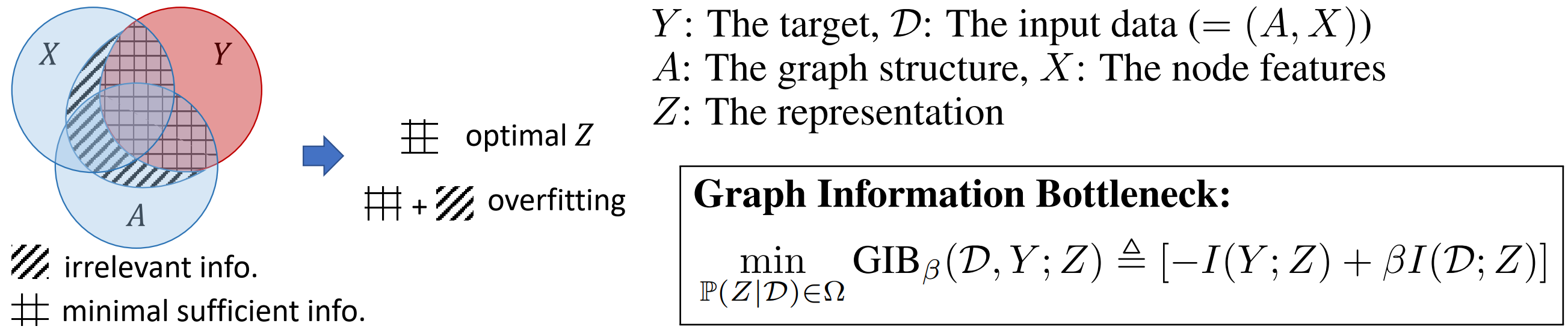

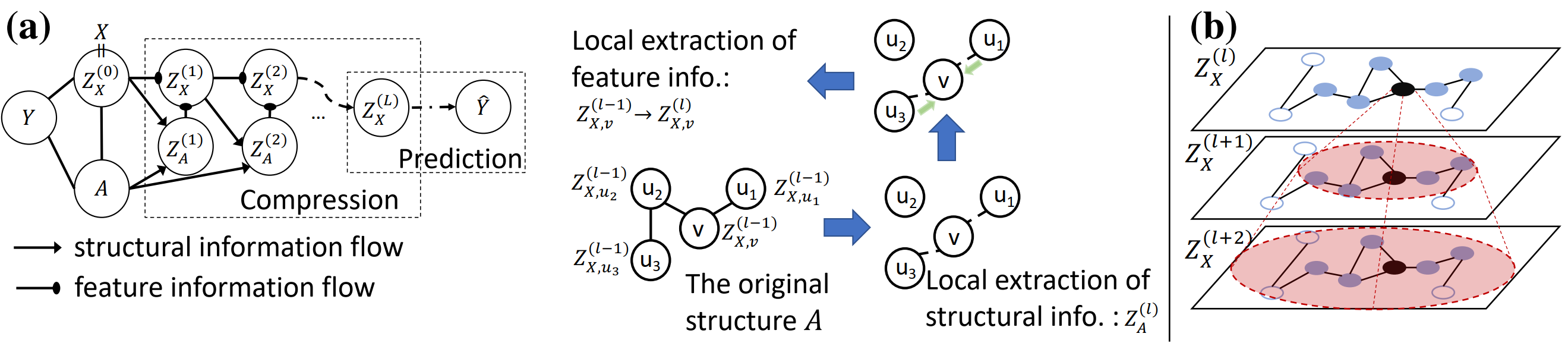

Representation learning on graphs with graph neural networks (GNNs) is a challenging task. Previous work has shown that GNNs are susceptible to adversarial attack. We here introduce Graph Information Bottleneck (GIB), which learns representation that is maximally informative about the target to predict while using minimal sufficient information of the input data. Concretely, the GIB principle regularizes the representation of the node features as well as the graph structure so that it increases the robustness of GNNs.

The algorithm iteratively extracts the representation of adjacency matrix (A) and the nodes (X) in each layer based on the local dependency assumption. For the modeling of the graph structure, we instantiate the GIB principle with two neighbor sampling algorithm, one using the categorical distribution: GIB-Cat, the other using the Bernoulli distribution: GIB-Bern, to model the neighbors under a local dependence.

We further derive variational bounds for the mutual information maximization and minimization, and optimize both models.

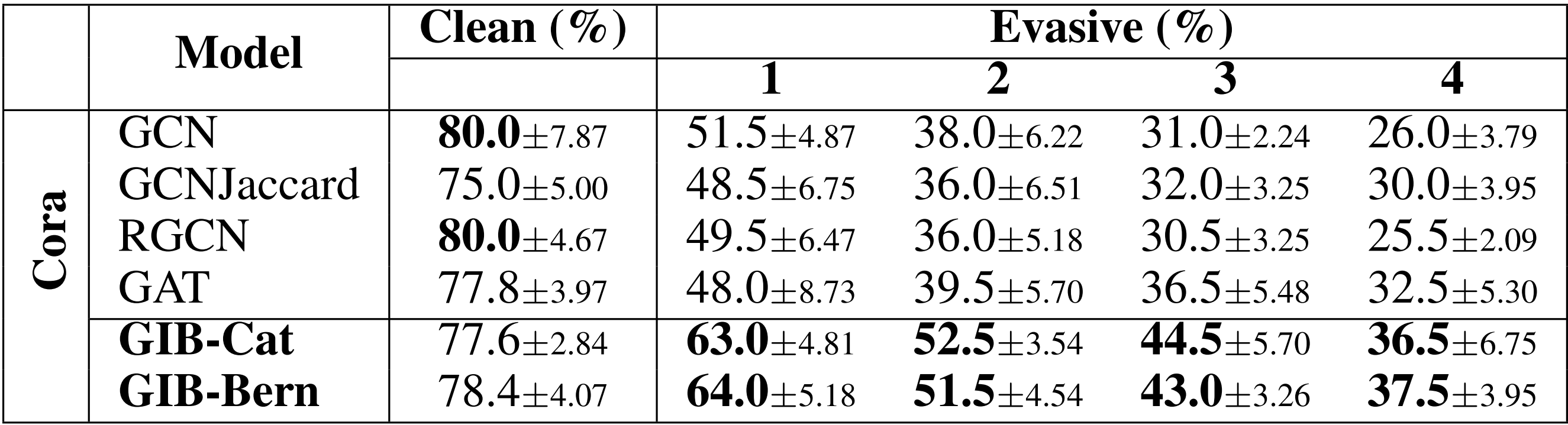

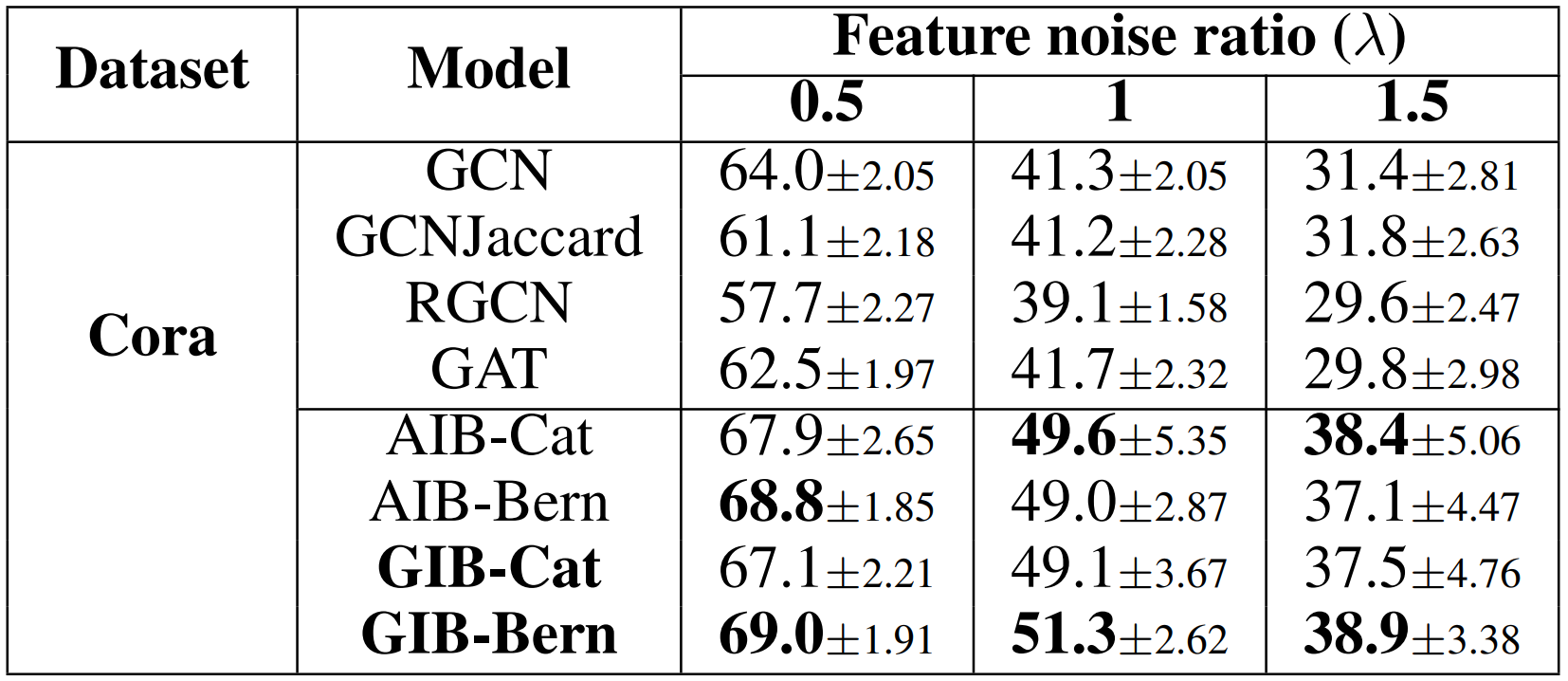

Experimental results show that GIB achieves more robustness than previous defence models against attacks on both graph structure and node features. We list both results on Cora and more results can be found in our paper.

Code

A reference implementation of GIB-Cat and GIB-Bern in PyTorch is available on GitHub.Datasets

The datasets used by GIB are included and automatically downloaded in the code repository.Contributors

The following people contributed to GIB:Tailin Wu*

Hongyu Ren*

Pan Li

Jure Leskovec