
SNAP Library 4.0, Developer Reference

2017-07-27 13:18:06

SNAP, a general purpose, high performance system for analysis and manipulation of large networks

|

|

SNAP Library 4.0, Developer Reference

2017-07-27 13:18:06

SNAP, a general purpose, high performance system for analysis and manipulation of large networks

|

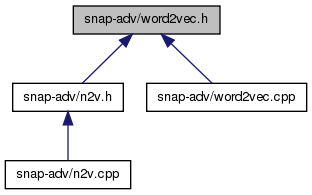

Go to the source code of this file.

Functions

| Type | Function |
|---|---|
| void | LearnEmbeddings (TVVec< TInt, int64 > &WalksVV, int &Dimensions, int &WinSize, int &Iter, bool &Verbose, TIntFltVH &EmbeddingsHV) |
| | Learns embeddings using stochastic gradient descent (SGD) on a skip-gram model with negative sampling. |

Variables

| Type | Definition |
|---|---|
| const int | MaxExp = 6 |
| const int | ExpTablePrecision = 10000 |
| const int | TableSize = MaxExp*ExpTablePrecision*2 |
| const int | NegSamN = 5 |
| const double | StartAlpha = 0.025 |

| void LearnEmbeddings (TVVec< TInt, int64 > & WalksVV, int & Dimensions, int & WinSize, int & Iter, bool & Verbose, TIntFltVH & EmbeddingsHV) |

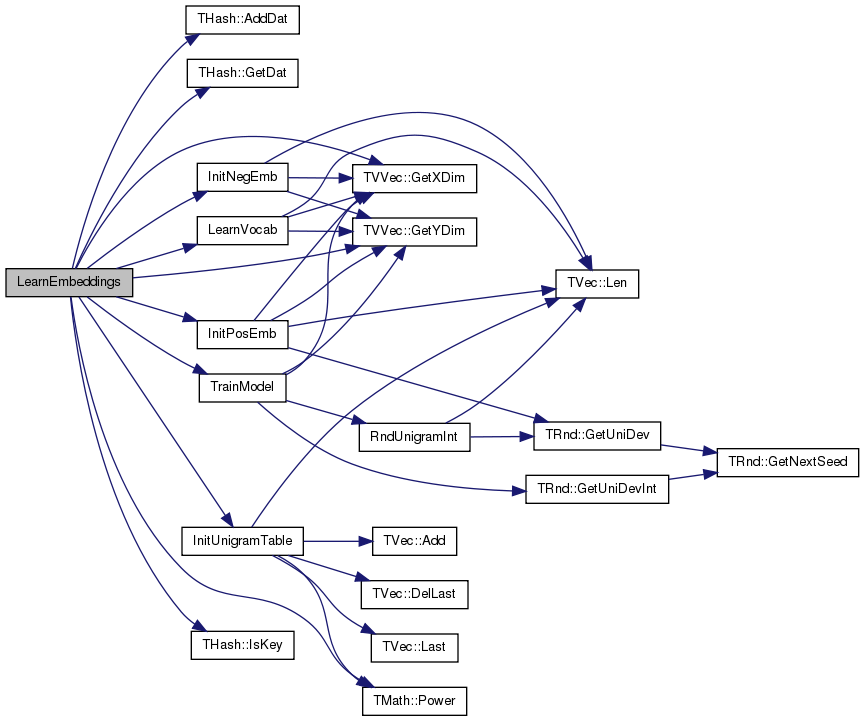

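Learns embeddings from the walks in WalksVV using stochastic gradient descent (SGD) on a skip-gram model with negative sampling, and stores the resulting vectors in EmbeddingsHV.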

Definition at line 148 of file word2vec.cpp.

References THash< TKey, TDat, THashFunc >::AddDat(), TMath::E, ExpTablePrecision, THash< TKey, TDat, THashFunc >::GetDat(), TVVec< TVal, TSizeTy >::GetXDim(), TVVec< TVal, TSizeTy >::GetYDim(), InitNegEmb(), InitPosEmb(), InitUnigramTable(), THash< TKey, TDat, THashFunc >::IsKey(), LearnVocab(), MaxExp, TMath::Power(), StartAlpha, TableSize, and TrainModel().

Referenced by node2vec().
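To make the skip-gram negative-sampling update concrete, the following is a minimal C++ sketch of one SGD step for a single (center, context) pair, assuming the standard word2vec formulation. It is not SNAP's TrainModel(): it uses std::vector instead of SNAP containers, computes the sigmoid exactly instead of through the precomputed table, and the names Vec, Sigmoid and SgnsStep are hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical helper names; a sketch of the standard SGNS update,
// not SNAP's TrainModel().
using Vec = std::vector<double>;

// Exact logistic sigmoid; a fast implementation would use a lookup table.
double Sigmoid(double x) { return 1.0 / (1.0 + std::exp(-x)); }

// One SGD step: `in` is the center node's input vector, `outPos` the
// context node's output vector, `outNegs` the negative samples' output vectors.
void SgnsStep(Vec& in, Vec& outPos, const std::vector<Vec*>& outNegs, double alpha) {
  const std::size_t dim = in.size();
  Vec grad(dim, 0.0);  // gradient for `in`, applied after all output updates
  // Gradient ascent on log sigma(in.out) (label 1) or log sigma(-in.out) (label 0).
  auto update = [&](Vec& out, double label) {
    assert(out.size() == dim);
    double dot = 0.0;
    for (std::size_t i = 0; i < dim; ++i) dot += in[i] * out[i];
    const double g = alpha * (label - Sigmoid(dot));
    for (std::size_t i = 0; i < dim; ++i) {
      grad[i] += g * out[i];  // uses `out` before it is updated
      out[i] += g * in[i];    // `in` is still unchanged at this point
    }
  };
  update(outPos, 1.0);
  for (Vec* neg : outNegs) update(*neg, 0.0);
  for (std::size_t i = 0; i < dim; ++i) in[i] += grad[i];
}
```

The positive pair is pulled together (label 1) and each negative output vector is pushed away (label 0). Accumulating the input-vector gradient in `grad` and applying it last means every output update sees the same, unmodified input vector, as in the original word2vec code.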

| const int ExpTablePrecision = 10000 |

Definition at line 12 of file word2vec.h.

Referenced by LearnEmbeddings(), and TrainModel().

| const int MaxExp = 6 |

Definition at line 9 of file word2vec.h.

Referenced by LearnEmbeddings(), LogSumExp(), TrainModel(), TMAGFitBern::UpdateApxPhiMI(), TMAGFitBern::UpdatePhi(), and TMAGFitBern::UpdatePhiMI().
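Assuming MaxExp plays the role of MAX_EXP in the original word2vec code (the bound beyond which the sigmoid is treated as saturated), the following arithmetic suggests why 6 is a reasonable cutoff: clamping $\sigma(x)$ to 1 for $x \ge 6$ or to 0 for $x \le -6$ changes it by less than 0.0025.

```latex
\sigma(x) = \frac{1}{1 + e^{-x}}, \qquad
\sigma(6) = \frac{1}{1 + e^{-6}} \approx 0.99753, \qquad
\sigma(-6) = 1 - \sigma(6) \approx 0.00247
```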

| const int NegSamN = 5 |

Definition at line 16 of file word2vec.h.

Referenced by TrainModel().
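NegSamN is presumably the number of negative samples drawn per positive pair. LearnEmbeddings() references InitUnigramTable() and TMath::Power(), which is consistent with word2vec's scheme of sampling negatives with probability proportional to frequency raised to 0.75; that exponent is an assumption here. The sketch below swaps the precomputed unigram table for std::discrete_distribution, and MakeNegSampler and DrawNegatives are hypothetical names.

```cpp
#include <cassert>
#include <cmath>
#include <random>
#include <vector>

const int NegSamN = 5;  // mirrors the documented value

// Hypothetical helper: sampler over node indices 0..n-1 with
// P(i) proportional to counts[i]^0.75 (word2vec smoothing exponent, assumed).
std::discrete_distribution<int> MakeNegSampler(const std::vector<long long>& counts) {
  assert(!counts.empty());
  std::vector<double> weights;
  weights.reserve(counts.size());
  for (long long c : counts) weights.push_back(std::pow(static_cast<double>(c), 0.75));
  return std::discrete_distribution<int>(weights.begin(), weights.end());
}

// Hypothetical helper: makes negSamN draws for one positive pair. As in the
// original word2vec loop, a draw that hits the positive node is skipped,
// not retried, so fewer than negSamN negatives may be returned.
std::vector<int> DrawNegatives(std::discrete_distribution<int>& sampler,
                               std::mt19937& rng, int positive, int negSamN) {
  std::vector<int> negs;
  for (int d = 0; d < negSamN; ++d) {
    const int cand = sampler(rng);
    if (cand != positive) negs.push_back(cand);
  }
  return negs;
}
```

Skipping instead of retrying keeps the loop bounded even when the positive node dominates the distribution.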

| const double StartAlpha = 0.025 |

Definition at line 19 of file word2vec.h.

Referenced by LearnEmbeddings(), and TrainModel().
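As its name suggests, StartAlpha is the initial SGD learning rate. Assuming the schedule follows the original word2vec code, the rate then decays linearly with training progress and is floored at StartAlpha × 10⁻⁴. In the sketch below, the function LearningRate and the meaning of `total` (all training positions across the Iter passes) are assumptions.

```cpp
#include <algorithm>
#include <cassert>

// Hypothetical helper: linearly decayed learning rate, assuming the
// original word2vec schedule. `processed` counts positions trained so far,
// `total` the positions across all passes.
double LearningRate(double startAlpha, long long processed, long long total) {
  assert(total > 0 && processed >= 0);
  const double alpha = startAlpha *
      (1.0 - static_cast<double>(processed) / static_cast<double>(total + 1));
  // Never let the rate fall below startAlpha * 1e-4.
  return std::max(alpha, startAlpha * 0.0001);
}
```

With StartAlpha = 0.025 the rate is about 0.0126 halfway through training and never drops below 2.5 × 10⁻⁶.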

| const int TableSize = MaxExp*ExpTablePrecision*2 |

Definition at line 13 of file word2vec.h.

Referenced by LearnEmbeddings(), and TrainModel().
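With the documented values, TableSize = 6 × 10000 × 2 = 120000, which is consistent with a table sampling the interval [-MaxExp, MaxExp) at ExpTablePrecision points per unit (spacing 10⁻⁴). The C++ sketch below builds such a table and looks up sigmoid values in it. The layout (storing σ(x) directly at index (x + MaxExp) · ExpTablePrecision) and the names BuildSigmoidTable and FastSigmoid are assumptions and may differ from SNAP's code.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Constants mirror the documented values in word2vec.h.
const int MaxExp = 6;
const int ExpTablePrecision = 10000;
const int TableSize = MaxExp * ExpTablePrecision * 2;

// Hypothetical helper: sigma(x) = e^x / (e^x + 1) at TableSize evenly
// spaced points covering [-MaxExp, MaxExp) (assumed layout).
std::vector<double> BuildSigmoidTable() {
  std::vector<double> table(TableSize);
  for (int i = 0; i < TableSize; ++i) {
    const double x = static_cast<double>(i) / ExpTablePrecision - MaxExp;
    const double e = std::exp(x);
    table[i] = e / (e + 1.0);
  }
  return table;
}

// Hypothetical helper: table lookup, saturating outside (-MaxExp, MaxExp).
double FastSigmoid(const std::vector<double>& table, double x) {
  assert(static_cast<int>(table.size()) == TableSize);
  if (x >= MaxExp) return 1.0;
  if (x <= -MaxExp) return 0.0;
  int idx = static_cast<int>((x + MaxExp) * ExpTablePrecision);
  if (idx >= TableSize) idx = TableSize - 1;  // guard against rounding near MaxExp
  return table[idx];
}
```

Each lookup costs one multiply and one array read instead of a call to exp(), and with 10⁻⁴ spacing the truncation error in σ is at most about 2.5 × 10⁻⁵.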